Sharing Research

Communicating Lack of Findings

This is a project report I wrote for CS224N: Deep Learning for Natural Language Processing. My partner and I decided to choose a paper, replicate its findings, and try to extend its results with a newer architecture type, the transformer (you can also read my literature review and watch my explainer video on transformers).

The main challenge of our project was that we failed to improve upon the results of the paper we chose, so we had no novel findings to report. Rather, we had to focus on what we did, what the problem was, and why our approach ultimately failed. When we talked with the professors and the TAs, we realized that it made sense that we couldn’t beat the reference paper, as the limitations of transformers are still very poorly understood and we were working at the edge of their possible use cases. Nonetheless, we had never read an AI academic paper that did not contribute to the field in the form of a new state-of-the-art model, so writing a paper whose contribution was a set of attempts that didn’t work was very challenging from a science communication perspective.

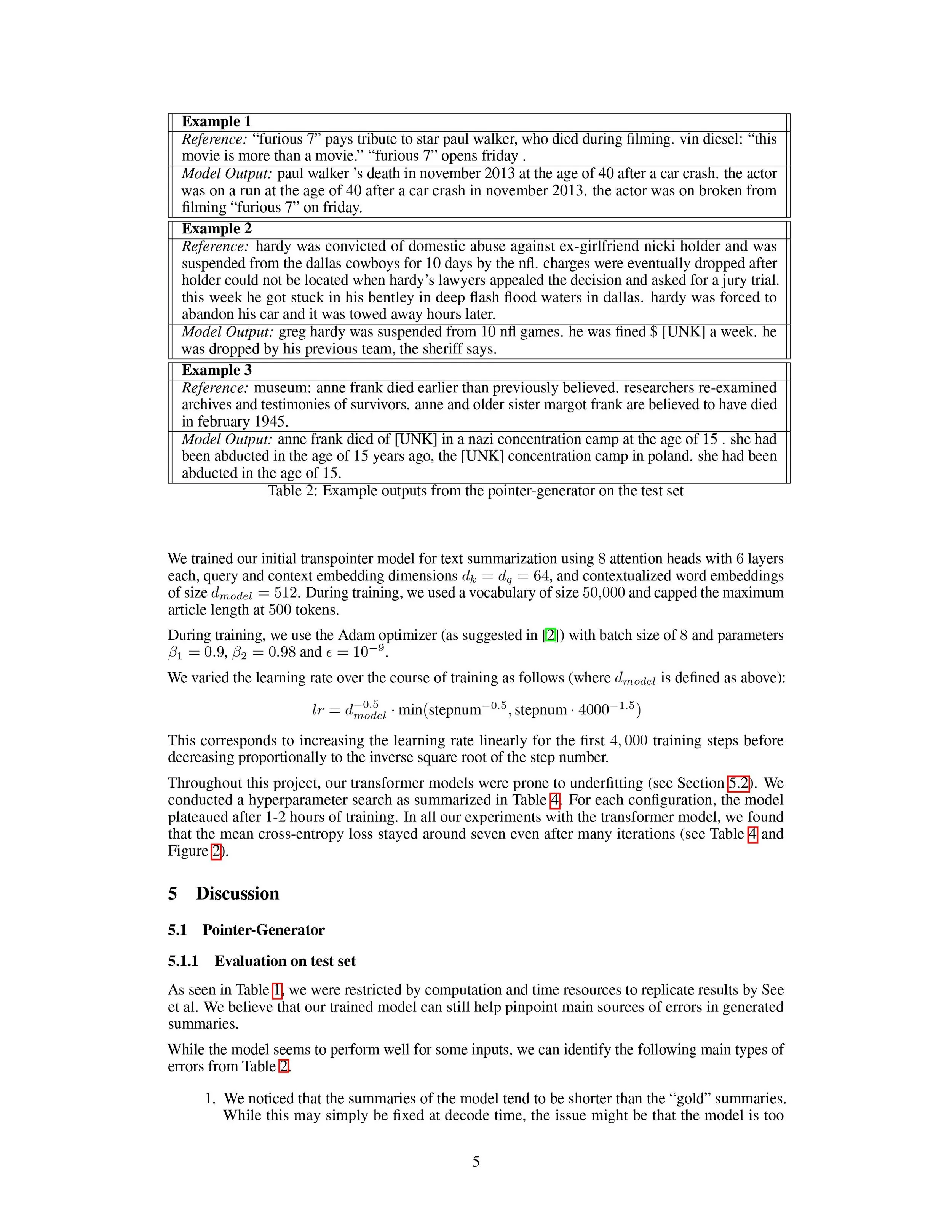

Throughout our paper, we focused on the overarching message of our work: “In news article summarization, the Transformer as it is applied to other Natural Language Processing tasks is not effective.” We made sure to explain the steps we took, and tried our best to analyze and assess why and how our models fell short. We had to spend more time troubleshooting our models’ errors, and less time simply reporting metrics.

Overall, I found this report to be a very formative piece to write. I learned a lot, and I am proud of the final product. I hope that our failed attempts and ideas for improvement will help the next person who tackles this task using transformers.

This project was completed in collaboration with Vrinda Vasavada.

Cover photo by Kelly Sikkema on Unsplash